Bioeconomy Policy

The Ethics Of Accessibility: Who Controls The Power In Synthetic Biology?

Balancing the benefits and risks of synthetic biology involves complex decisions about regulation, societal impact, and responsible use of technology

Jun 3, 2024

Someone a long time ago said, “With great power comes great responsibility.”

The phrase has persisted, famously via Spider-Man, which is pertinent for an article on biosecurity and responsible innovation.

Synthetic biology isn’t planning on making any mutant spider superheroes anytime soon. But when the goal is to be able to predictably engineer life, much like we can model and build an airplane, there’s a lot to think about.

There are tantalizing possibilities to do good. Personalized medicines and cures for rare diseases. Environmentally conscious proteins. Sustainable plant biofactories.

But there are also potentially nefarious uses. What if the tools we use to cure rare diseases, for example, are turned into weapons?

Then there’s the question of what we ought to do. Just because a certain technology exists, is it always right to use it? Will this technology negatively impact the health of ecosystems or society?

Who gets to decide?

These questions are not always easy to answer. Whether it’s governments, regulatory bodies, scientists, businesses, or consumers, we all have a stake.

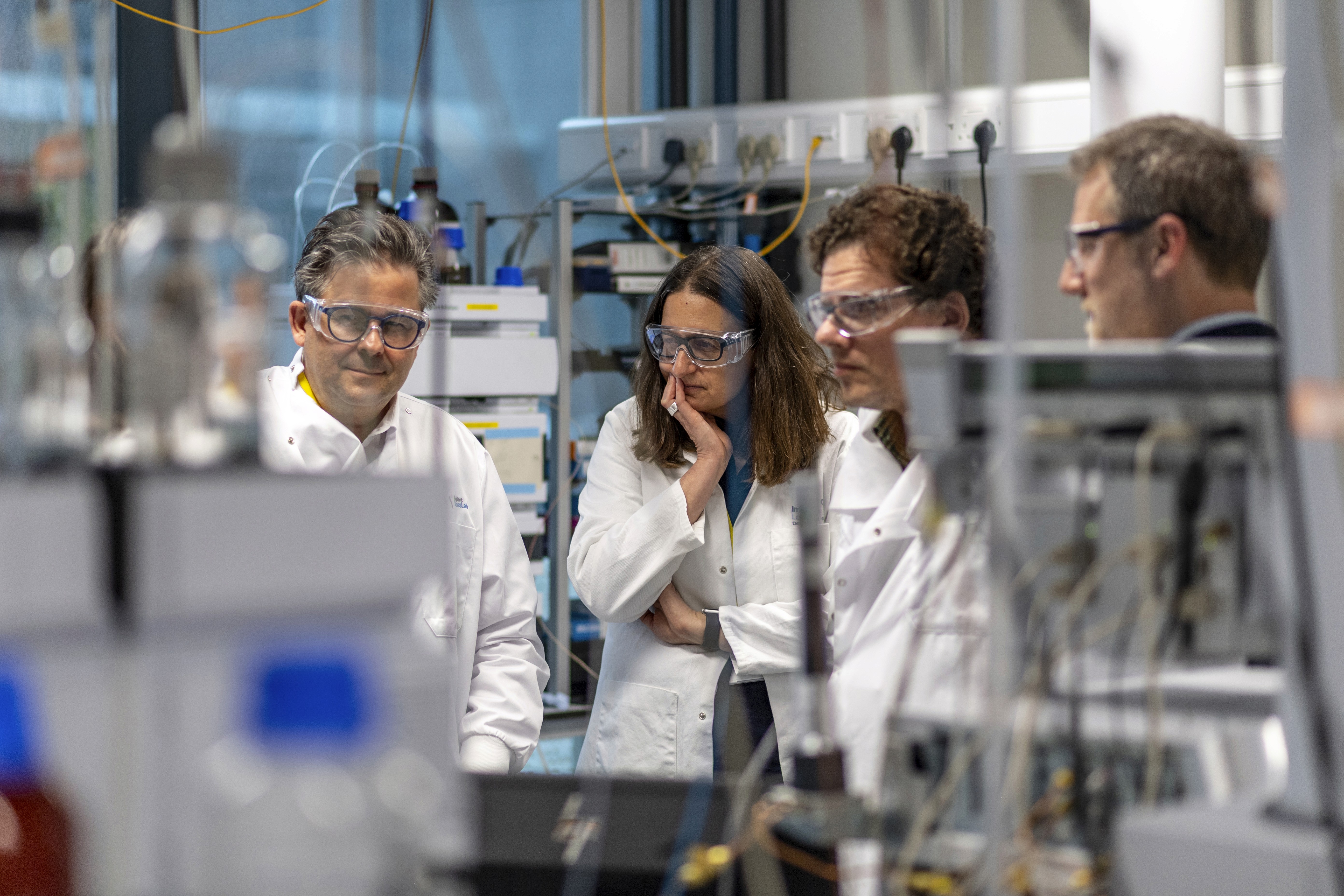

Such an impossibly large and complex topic is difficult to summarize in a single article. But with the help of Sarah R. Carter, who was track chair of Biosecurity and Bioethics at SynBioBeta this year, we’re going to give it a try.

Sarah, thank you for spending some time with us to discuss such a complex issue. What is your background in biosecurity and bioethics?

SC: I am a scientist with a PhD in neuroscience from UC San Francisco. I’ve been working for over fifteen years now on policy issues here in Washington, DC. Almost all of that has been on what we now call the bioeconomy.

From the beginning, I started thinking about this in two tracks. I do a lot on biosecurity, especially on the potential for misuse of tools and capabilities. How do we address that? What does that mean, and what are the responsibilities?

Then, what I call the fun side is the innovation. The policy issues related to advanced biotech, like the regulatory system, responsible innovation and environmental release.

I worked for the Craig Venter Institute for five years and for the past eight I’ve been an independent consultant, working with organizations such as the Nuclear Threat Initiative on issues like DNA synthesis screening.

When a lot of people think about engineering life, they probably think about GMOs. After many decades of often quite fraught debate, what have we learned?

SC: For better or worse, I feel like food jumped right to one of the most challenging intersections of people with technology. Food is the most intimate thing that we have. We put it in our bodies, and it's part of our cultural traditions.

If you’re trying to develop a range of tools and products to integrate into society, that’s a pretty interesting place to start.

But if you think about a lot of synthetic biology products, a microbe is in a bioreactor producing fuels or materials like fibers and plastic. It doesn’t raise as many concerns. In reality, the risks are very low in terms of the impact it could have on the outside world.

More interesting are bioproducts that have intended use in the environment. Those raise really big issues. I spent a good bit of time several years ago looking at gene drive mosquitoes. Understanding the risks of something like that on ecosystems is a real challenge.

But most synthetic biology products are somewhere in between.

How do we regulate technologies like this, when there’s such a broad spectrum of uses? What if I have a synthetic biology product of value to human health, for example?

SC: If you are making a claim that’s medically relevant to a specific disease or condition in the US, you’d need to go through the FDA. There’s a whole lot of oversight and a big regulatory process to it.

If instead you are talking about something as a dietary supplement, or say as a novel device that can tell you things about your gut microbiome, that might fall outside of the FDA’s regulatory process.

Even so, there are measures you can take to make sure that product doesn’t actually do any harm. That’s important regardless of regulatory oversight, because it helps your customers and the broader public have confidence that your product is safe.

How much say does the consumer have? In the US, GMOs have been widely used for decades. In the EU, however, quite severe regulations mean that they are used far less. Does this have anything to do with consumer pressure, or is it due to differences in the process?

SC: Well, here's the big difference. In the US, we have a science-based regulatory system. Regulatory bodies test if a product has an unreasonable risk to health or the environment. There have been very few concerns that something like Bt corn poses a real risk.

Instead, concerns are about broader issues. Are we going to be using monocultures? Is this messing up the dynamic between farmers and consumers? These are big societal questions.

Activists have railed against the regulatory system, but addressing these big societal challenges is not what it’s for.

In Europe, on the other hand, they’ve integrated more of those societal concerns and included a broader range of stakeholder views. They’ve taken a precautionary approach and ended up in a very different place.

I do think there’s a role in the US for stakeholder outreach and engagement to let people’s concerns be heard. But for me, it should be something different to the regulatory system, which does a really good job of those science-based decisions.

Let’s say someone wants to use synthetic biology for nefarious uses. What’s the worst that could happen?

SC: That's an open question and I’ve been thinking a lot about this. When talking about a global catastrophic risk, some people think along the lines of COVID, for example. Others are thinking of a zombie apocalypse like The Last of Us.

That’s really different from COVID, which was bad, but we got through it.

It’s really hard for me to imagine something as bad as the zombie apocalypse scenario. With synthetic biology, it’s hard right now to imagine someone engineering something worse than you would already find in nature.

How do we stop that sort of thing from happening? Say, someone targeting a group or a population with an engineered pathogen.

SC: Before you even talk about stopping it, you have to understand what it would take to do it.

I did a report with the Nuclear Threat Initiative last year on benchtop DNA synthesis devices. One of the concerns raised is about people having access to all the DNA they want, with the potential to make bioweapons.

We were very careful to say, ok, even if people do have unfettered access, what hurdles would remain? You’d have to take the oligos and stitch them together into genomes. For larger genomes, it gets harder and harder.

You’d need training, the infrastructure and the lab, and the protection from harming yourself. You start to get to some very specific threat models.

One of the only successful attacks of this kind came from Bruce Ivins, with the Amerithrax attacks back in 2001. He was in a high-security military lab at Fort Detrick.

I’m not sure you can prevent people from using the tools available to them to do bad.

No tool is inherently good or bad in and of itself. The same technology can be used, and is being used, for great good.

SC: The same kinds of information that, as we’ve discussed, would help you target a population will also be very useful, for example, for understanding diseases specific to that population.

Personalized medicine, disease risk factors, and the like are all very closely related.

The same is true for basic research and for the broader bioeconomy. Access to synthetic DNA, along with all of the different tools and capabilities for engineering biology, has been totally essential to our understanding of how biological systems work. These tools are also going to let us find better solutions for climate change and environmental sustainability. So the upsides are very real.

Do we know enough about biology to be really sure we are doing what we ought to? Even if we do, there will be those who say, “Maybe we shouldn’t do this.”

SC: That’s a question everyone should ask themselves.

Going back to the example of gene drive mosquitoes, the discussion there has largely been driven by concerns about the effect on ecosystems, the frogs that eat the mosquitoes, and so on. I’m not sure we know enough about ecosystems to figure it all out with great confidence.

But then there are the people, the public health ministries, who’ve spent the past hundred years trying to eliminate mosquitoes. For them, this might look like a very promising approach.

In my opinion, one thing we don’t know enough about is natural ecosystems. If some synthetic microbes end up in the environment, what would be the effect? Would they persist? What are the long-term factors?

The truth is we should get serious about learning those things because it’s going to matter very soon.

So, considering all this, where does the power lie? Are we all stakeholders?

SC: Yes, for sure.

We’ve spent the last 50 years or so getting to a point where we can predictably and reliably engineer biology to do whatever we want. As we get closer, these questions become more and more urgent.

Now we have to ask, what do we want? What should we want?

The answers to those questions are very complicated. If you have a truly inclusive stakeholder process, the responses are going to be all over the map. You’ll get different responses from different people.

We need to collectively tackle those questions.