Making machine learning in science an everyday reality

Aug 28, 2019

A few months into my postdoc, an Excel spreadsheet dealt me quite a blow. As I was preparing to run some statistical analyses, I made a horrifying discovery: some of my sample metadata had been incorrectly merged into a single Excel spreadsheet. The metadata had to be fixed, and all of the preliminary analyses I had done had to be repeated. Sadly, even after I fixed the metadata, the dataset was unsalvageable. Not enough samples had been collected, and categorical metadata were missing for some samples, so there were no statistical tests I could run to identify any meaningful patterns. What sounded like a really cool project when I started ended up being nothing but a giant headache and a colossal waste of time.

Unfortunately, my experience isn’t unique. Machine learning, one of the most powerful capabilities computers have brought to humankind, has been used in the life sciences since the 1990s, with exciting potential to deliver new diagnostics, improved treatments, or perhaps even cures. Yet despite its power, machine learning in the life sciences is still not an everyday reality. The barrier to unlocking its full potential isn’t bad tools or a fear of statistics: it’s bad data resulting from poorly designed studies and data systems, just like my first postdoc project.

Poorly designed studies, unqualified assays, and bad data systems lead to datasets that are undersampled, noisy, unannotated or under-annotated, or simply not structured appropriately for statistical analysis. This pretty much guarantees poor results when we try to apply machine learning. To make machine learning in science an everyday reality, we need better designed assays that produce higher quality, more usable data. We need to reshape the entire scientific experiment.

Scientific blueprints for success

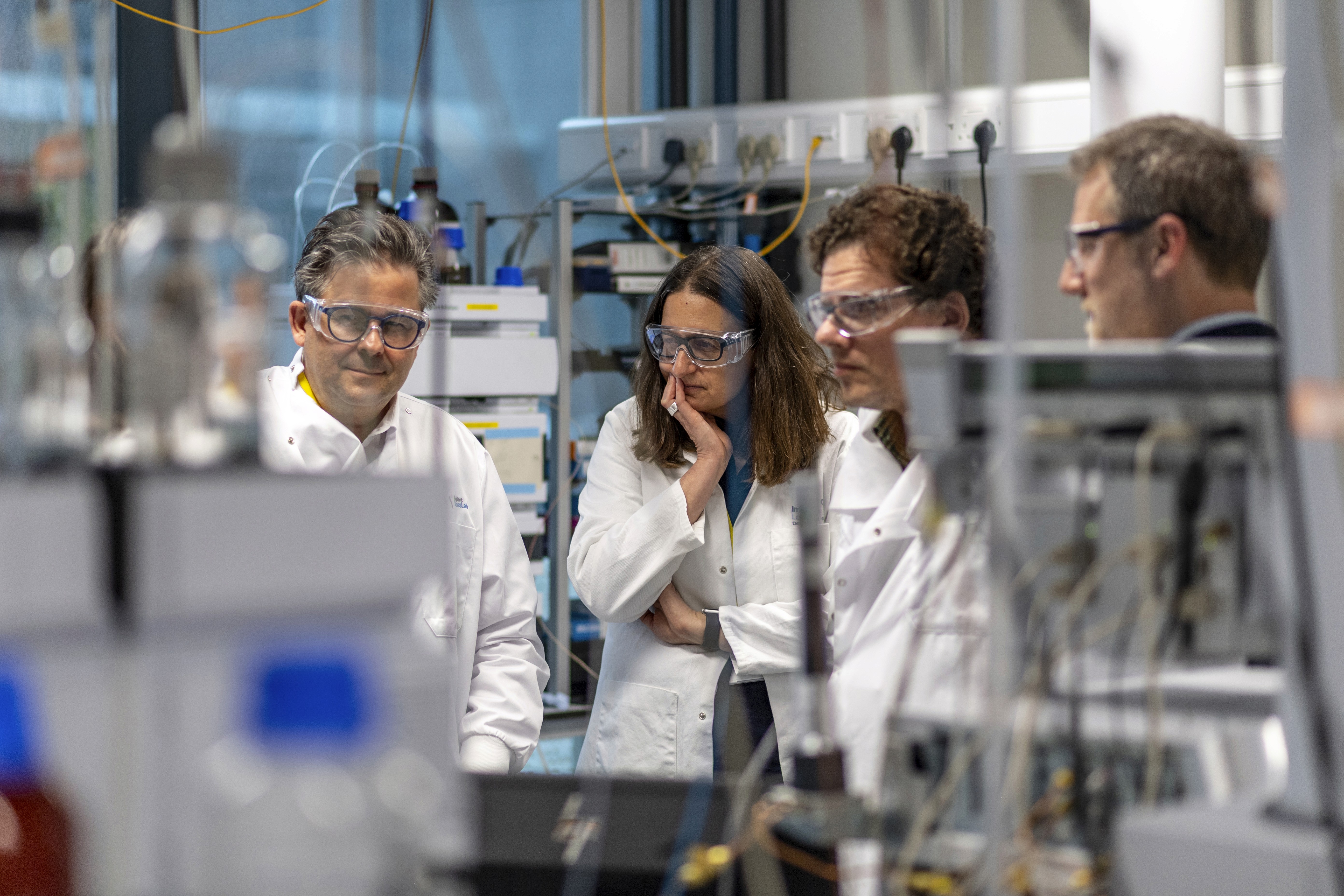

Five years ago, Timothy Gardner, a mechanical and biomedical engineer, decided he’d had enough of wasted time, money, and effort (his own and other scientists’), and he decided to do something about it. He founded Riffyn to help people do better science by producing better data. Through a cloud-based software platform, Riffyn uses scientific blueprints to organize people, process, technology, and, of course, data. The approach as a whole has been dubbed functional Business Process Mapping (fBPM) by John Conway, Riffyn’s CCO.

“FAIR (Findable, Accessible, Interoperable, and Reusable) are the criteria used to answer ‘do I have a good data environment?’ What people forget are all the business and scientific processes that are involved in that, and if you go after things from a point-solution perspective, you're not going to be successful. At Riffyn, we are not only concerned about FAIR data, but FAIR working processes as well,” he says.

Gardner explains how it works: “We start by helping the customer to understand their current state and then map a view of the future. We map what you do as executable processes within Riffyn’s system that you can use directly in the lab to run experiments and shape data for analysis. The process has become a visible, transparent representation of what you do that can be put into practice and improved upon, just like a prototype or a blueprint for a mechanical part.” Those blueprints, he says, can be thought of as “source code for science” that can be shared, reused, built upon, and merged.

Riffyn CEO Tim Gardner started his company five years ago to help people do better science by producing better data. Image credit: Riffyn

The end result of using Riffyn’s software is rigorously structured data that is instantly ready for machine learning. And because the software is cloud-based and can handle nearly any input data, it works across people, places, time, and scientific processes. It also applies metadata and links data automatically. It’s a powerful tool for collaborative science; Riffyn’s VP of Science, Loren Perelman, likens it to the Rosetta Stone of scientific data.

Time for science

I can’t help but think how things might have been different with my postdoc project if I’d used Riffyn’s software. Not only would my metadata problems, which came from multiple data sources and multiple collaborators, have been solved, but I would have been able to do some meaningful statistical analyses and would have saved hours and hours of lost time.

Those hours of lost time could be significant. For me, they would have meant spending less of the lab’s money on the compute cluster I was using, or being able to spend more time on a different project. But for companies in the life sciences, the benefits could have an even greater impact.

“Handling data right can have an incredible transformation on an organization’s productivity: I mean, doubling, tripling, quadrupling how fast you make discoveries, and getting things to market five times faster,” says Gardner. All of this, of course, translates into significant financial gains as well.

And according to Perelman, the most important result of saving time isn’t the money saved; it’s “freeing up people to do important things” like thinking critically about their data and drawing meaningful conclusions from it, designing the next set of experiments, or figuring out how to help the world benefit from their discoveries. That means more discoveries, and more impact from scientific research.

A people problem

It seems like an easy sell, but convincing people that Riffyn’s software will propel their lab or company forward is harder than you might think.

“It’s a people and organizational problem as much as a tech problem,” says Gardner. “People, data, tech, and process all have to work together.”

One of the most important things Riffyn works on with customers is qualifying their assays: taking the time up front to learn how an assay works, identify and remove sources of noise, and ensure that it will yield consistent data before it’s applied to an experiment. To most people, that seems like a big up-front time investment. Add to that the time it takes to learn the software (which Riffyn trains customers on) and to implement a lab-wide change in processes, and to many the hassle just doesn’t seem worth it.

But spending a few weeks up front qualifying your assay is well worth it, says Gardner. He offers one customer’s story as an example.

“One of our customers was screening for strains, and every month for four months they sent what they identified as a better strain to the next group in their development cycle (the next larger scale), but it didn't work. We helped them look at their historical data sets and, using a set of in-common strains as internal references, we normalized the data across multiple experiments. So what did we find? It turns out that over four months they had not found a single strain that was actually better. What they actually found were temperature deviations and media lots that had better characteristics.”

Once they figured out the problem, they immediately designed process corrections, and their research program accelerated. Real hits started flowing from their screening pipeline.
And most importantly, they got their product to market ahead of schedule.

Gardner, Perelman, and Conway all agree that one of the biggest challenges they face is people who resist change or simply lack the data and process skills they need. Whether it’s human nature, a desire for “artisan” science, a fear that computers play too large a role, or no real desire to do things differently than they’ve been done for decades, all three agree that teaching people is an important part of Riffyn’s work. And the positive feedback they get from customers, who are surprised at the deep level of support and scientific interaction Riffyn provides, tells them they are on the right track.

“The most important thing we do is show people, with their own information and a true solution, that Riffyn is the right path forward. We want to do a good job, because the solution is really serious to our customers. People are coming to you with problems they face daily, they're putting their faith in your ability to deliver, and we take it really seriously ... we're delivering what we promised to deliver,” says Perelman.

And that, Conway says, is one of the keys to Riffyn’s success: they keep their promises.

“People have tried this in the past, but couldn't get their clients through the transformation process because they didn’t address all the elements: people, process, data, and technology. And thus they've wasted their clients’ time. So now those clients are behind, now they don't have the tools, and they're scared to trust anyone again. That’s what 30 years of scientific software has done to scientific R&D,” he says.

But trust can be rebuilt, and the Riffyn team has absolute faith in that. Building trust and teaching people a better way to do science is the mission the Riffyn team is dedicated to.
They’ve already made incredible strides over the past five years, helping customers like Novozymes, BASF, and others accelerate their R&D programs.

“In the next five years, Riffyn aims to become the backbone of all data-driven R&D laboratories. This backbone frees scientists to spend their time advancing novel scientific ideas, instead of spending so much time doing data housekeeping. Riffyn takes care of the data problems, the process problems, and the machine learning problems. Our next phase is to build the tool sets that help scientists and labs increase their efficiency in taking ideas all the way to marketed products … so discovering more and inventing more really becomes a reality,” says Conway, looking ahead.

I am excited to see that prediction become reality. Fortunately, I don’t think I’ll have to wait too long.

Ready to rethink your scientific experiments? Meet Riffyn and hear Timothy Gardner speak at SynBioBeta 2019, October 1-3, in San Francisco, CA.