
From Sequence to Function with Protein Language Models

Applying the technology behind large language models to protein sequences is expediting the study and design of proteins

Nov 4, 2024

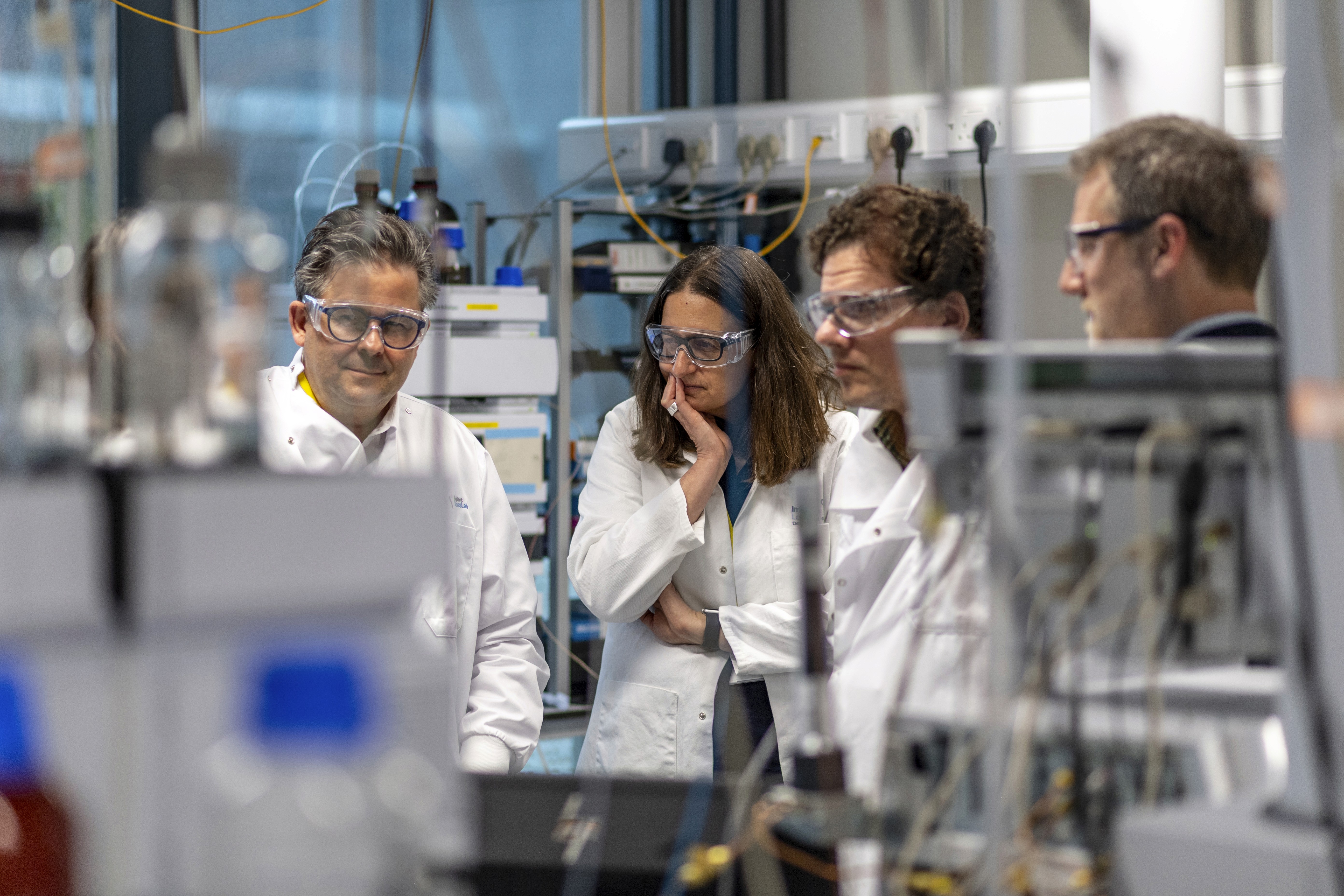

[DALL-E]

Artificial intelligence is having its moment in protein research. The protein structure prediction tool AlphaFold won its developers, John Jumper and Demis Hassabis, half of the 2024 Nobel Prize in Chemistry. The other half, awarded for computational protein design, went to University of Washington biochemist David Baker, whose research group has also contributed to AI-based protein structure prediction with RosettaFold.

Given a protein sequence, these models use deep learning to predict how it folds. One of the first steps for both is constructing a multiple sequence alignment (MSA), a long-established but time-consuming bioinformatics technique. Protein language models (PLMs), a newer AI approach to protein research, do not require MSAs and are consequently faster and more computationally efficient. But they offer protein research more than just speed.

Modeling Protein Sequences

AlphaFold builds an MSA by searching databases for protein sequences similar to the input sequence. It then analyses co-evolutionary relationships between amino acid residues in the alignment to figure out how the input protein folds. In contrast, a PLM infers these co-evolutionary relationships implicitly during its training.
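To make “co-evolutionary relationships” concrete, the Python sketch below computes one classic MSA-based signal: the mutual information between two alignment columns, which is high when the residues at two positions tend to change together. It is a textbook statistic chosen for illustration, not AlphaFold’s actual method, and the four-sequence alignment is made up.

```python
import math
from collections import Counter


def column(msa, i):
    """Return the residues at alignment position i across all sequences."""
    return [seq[i] for seq in msa]


def mutual_information(msa, i, j):
    """Mutual information (in bits) between alignment columns i and j.

    High values mean the residues at i and j vary together across the
    alignment, a classic (if noisy) signal of co-evolution.
    """
    n = len(msa)
    col_i, col_j = column(msa, i), column(msa, j)
    p_i = Counter(col_i)              # residue counts in column i
    p_j = Counter(col_j)              # residue counts in column j
    p_ij = Counter(zip(col_i, col_j))  # joint counts of residue pairs
    mi = 0.0
    for (a, b), count in p_ij.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((p_i[a] / n) * (p_j[b] / n)))
    return mi


# Made-up toy alignment: column 0 is fully conserved, and columns 1 and 3
# co-vary (K always pairs with E, R always pairs with D).
toy_msa = [
    "MKAE",
    "MRAD",
    "MKGE",
    "MRGD",
]

print(round(mutual_information(toy_msa, 1, 3), 3))  # 1.0 (co-varying)
print(round(mutual_information(toy_msa, 1, 2), 3))  # 0.0 (independent)
```

A conserved column carries no co-variation signal at all, which is one reason alignment-based methods need many diverse homologs to work well.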

PLMs are large language models (LLMs) trained on protein databases. Think of ChatGPT if it were fed protein sequences instead of text. Once trained, LLMs don’t scour the entirety of their training text to respond to each query. Instead, they generate a response based on statistical associations between letters and words that they learned from the training data.

Likewise, PLMs identify hidden patterns in protein sequences and use them to generate new ones. “PLMs can learn the syntax of protein language or the rules of protein sequences. They essentially capture what are valid protein sequences,” said Claire McWhite, a newly minted assistant professor at the University of Arizona. In other words, they can recognize a nonsense protein sequence.

PLMs treat individual amino acids somewhat like LLMs treat individual letters or words. “They get you a robust numeric representation of the identity and context of a particular amino acid in a sequence,” said McWhite. This numeric description of the amino acids enables the use of machine learning on protein sequences.
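As a rough illustration of the first step, here is a minimal Python sketch that turns a protein sequence into numbers the way a language model turns text into tokens. The vocabulary ordering and the one-hot encoding are illustrative assumptions; real PLMs define their own vocabularies (with extra special tokens) and learn context-dependent vectors rather than fixed ones.

```python
# The 20 standard amino acids in one-letter code. This ordering is an
# arbitrary illustrative choice, not the vocabulary of any real PLM.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
TOKEN_ID = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def tokenize(sequence):
    """Map each residue to an integer token ID, as an LLM maps words to IDs."""
    return [TOKEN_ID[aa] for aa in sequence.upper()]


def one_hot(sequence):
    """Encode each residue as a 20-dimensional one-hot vector.

    This captures identity only. A trained PLM instead produces a learned
    vector for each position that also reflects its surrounding context.
    """
    vectors = []
    for token in tokenize(sequence):
        vec = [0] * len(AMINO_ACIDS)
        vec[token] = 1
        vectors.append(vec)
    return vectors


seq = "MKTAY"
print(tokenize(seq))                            # [10, 8, 16, 0, 19]
print(len(one_hot(seq)), len(one_hot(seq)[0]))  # 5 20
```

In a one-hot encoding, a lysine looks the same wherever it appears; the value of a PLM is that the vector for that lysine changes depending on the rest of the sequence.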

Not only do PLMs not need MSAs, they can also help construct them where traditional approaches fail. Take, for instance, the “twilight zone” of sequence similarity, the low level of similarity at which conventional alignments become unreliable. In a study published in Genome Research, McWhite and colleagues showed that the numeric representations of amino acids produced by PLMs can serve as input to a new MSA algorithm.

“They can align regions of the protein, even when the raw sequence has substantially diverged,” said McWhite. PLMs can do this because they capture the characteristics of each amino acid in its sequence context. This property underscores their utility for a range of use cases in biology.
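One way to picture alignment with PLM representations is a classic dynamic-programming alignment (Needleman-Wunsch) in which the score for pairing two residues is the cosine similarity of their vectors instead of a substitution-matrix lookup. This is a simplified sketch, not the algorithm from the Genome Research study, and the two-dimensional “embeddings” are made up; real PLM embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def align_embeddings(emb_a, emb_b, gap=-0.5):
    """Global (Needleman-Wunsch) alignment scored by embedding similarity.

    emb_a, emb_b: lists of per-residue vectors. Returns aligned index
    pairs, with None marking a gap. The gap penalty is an arbitrary choice.
    """
    n, m = len(emb_a), len(emb_b)
    # score[i][j]: best score aligning the first i residues of A with the first j of B
    score = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + cosine(emb_a[i - 1], emb_b[j - 1]),  # pair residues
                score[i - 1][j] + gap,  # gap in B
                score[i][j - 1] + gap,  # gap in A
            )
    # Trace back from the bottom-right corner to recover the alignment.
    pairs = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and math.isclose(
            score[i][j], score[i - 1][j - 1] + cosine(emb_a[i - 1], emb_b[j - 1])
        ):
            pairs.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif i > 0 and math.isclose(score[i][j], score[i - 1][j] + gap):
            pairs.append((i - 1, None))
            i -= 1
        else:
            pairs.append((None, j - 1))
            j -= 1
    return pairs[::-1]


# Made-up per-residue vectors: protein B lacks the middle residue of protein A.
protein_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
protein_b = [[1.0, 0.0], [1.0, 1.0]]
print(align_embeddings(protein_a, protein_b))  # [(0, 0), (1, None), (2, 1)]
```

Because the score compares learned representations rather than raw letters, two residues can align well even if they are different amino acids, as long as the model places them in similar contexts.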

What Can Protein Language Models Do?

Because they rely on MSAs, tools like AlphaFold and RosettaFold often struggle with novel proteins that lack similar sequences to compare against. PLMs can predict structures for such sequences because they implicitly infer structural features from sequence data alone. Even for proteins with known homologs, PLMs are much faster.

ESMFold, a PLM-based structure predictor developed by Meta, was up to 60x faster than AlphaFold 2 (its next iteration narrowed this gap). Earlier this year, some of the Meta researchers behind ESMFold branched out to start the AI company EvolutionaryScale. Its protein language model, dubbed ESM3, simultaneously reasons over sequence, structure, and function to improve the accuracy of predictions.

Most publicly available protein sequences lack functional annotations. Just as with structure prediction, AI models don’t replace experiments, but they can tell researchers where to look. A study published in Briefings in Bioinformatics predicted protein-DNA binding sites from the protein sequence alone. In another study, published in Nature Genetics, researchers used a PLM to predict the disease effects of all possible missense variants (single nucleotide mutations that change one amino acid in the encoded protein) across the entire human genome.
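Here is a sketch of what scoring “all possible missense variants” involves computationally: enumerate every single-residue substitution, then score each with a model. The log-likelihood ratio used here (the log probability of the mutant residue minus that of the wild-type residue at the same position) is one common way to score variants with a PLM, and `toy_position_probs` is a hypothetical stand-in for a real model’s predictions, not any library’s API.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def missense_variants(sequence):
    """Enumerate every single-residue substitution as (position, wild type, mutant)."""
    variants = []
    for pos, wt in enumerate(sequence):
        for mut in AMINO_ACIDS:
            if mut != wt:
                variants.append((pos, wt, mut))
    return variants


def toy_position_probs(sequence, pos):
    """HYPOTHETICAL stand-in for a PLM's predicted distribution at `pos`.

    It puts 50% on the wild-type residue and spreads the rest evenly.
    A real model would condition on the whole sequence.
    """
    wt = sequence[pos]
    other = 0.5 / (len(AMINO_ACIDS) - 1)
    return {aa: (0.5 if aa == wt else other) for aa in AMINO_ACIDS}


def llr_score(sequence, pos, wt, mut, position_probs=toy_position_probs):
    """Log-likelihood ratio log p(mut) - log p(wt); more negative = less plausible."""
    probs = position_probs(sequence, pos)
    return math.log(probs[mut]) - math.log(probs[wt])


seq = "MKTAY"
variants = missense_variants(seq)
print(len(variants))  # 95 = 5 positions x 19 alternatives
pos, wt, mut = variants[0]
print(f"{wt}{pos + 1}{mut}", round(llr_score(seq, pos, wt, mut), 3))  # M1A -2.944
```

This enumerates all 19 alternatives per position at the protein level; depending on the codon, a single nucleotide change can reach only some of them.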

How proteins bind to DNA or what diseases their mutated forms cause are only two of many ways proteins exert their functions. An overarching goal for researchers and companies developing PLMs is to be able to predict function more generally. “You want to be able to interpret genomic sequence or protein sequence and get to the function directly,” said Yunha Hwang, CEO of the scientific nonprofit Tatta Bio.

Tatta Bio is working on ML-driven tools that take a sequence as input and output a function. “Given an uncharacterized gene or protein, there's no way of deriving any hypothesis or designing any experiments because you don't know what to test for,” said Hwang. Generating plausible hypotheses from sequence data lets researchers start testing them experimentally. In drug discovery, for example, PLMs allow screening a much larger number of protein-drug interactions than conventional in silico techniques, and they can reduce failures during experimental validation.

Companies are also leveraging the power of PLMs to design novel proteins. The problem of protein design is the inverse of the protein folding problem. Say you want to design an antibody that targets a particular diseased cell or an enzyme that is a more efficient catalyst for a particular reaction: What should its sequence be?

“What people want from a model is to input a bunch of parameters or a specification sheet that describes all of the things they want their protein or small molecule or biological entity to do,” said Kathy Wei, CSO of San Francisco-based startup 310.ai. The company’s language model turns text prompts into protein sequences with desired functions.

When designing custom proteins, features that usually matter include thermostability, the ability to bind a particular target or avoid another, functionality in mouse models if it’s a therapeutic protein, and not resembling patented sequences. PLMs infer how parts of a protein sequence influence these properties and are gaining traction particularly in de novo protein design.

Beyond drug design, PLMs could be used to create novel, more efficient enzymes for a variety of applications. A 2023 Nature Biotechnology paper, for instance, demonstrated a PLM that could generate proteins across diverse enzyme families. It produced lysozymes from five families with catalytic efficiency similar to that of natural lysozymes, despite little sequence similarity to them.

Building Foundation Models for Biology

Proteins are only one aspect of the language of life. “Treating human language as sentences made out of words seems to have captured almost anything you can ask for in a language model,” said Wei. “But there's a lot of information that is just not in the protein sequence.”

In parallel, researchers are working on DNA language models, which are LLMs trained on DNA sequences. Since protein sequences and structures are ultimately encoded in DNA, these models could offer additional insights into how proteins fold and work.

But biology is far more complex than either protein or DNA sequences alone can capture. A true foundation model, as opposed to a PLM trained for a specific task, would be able to take in large, unannotated biological datasets and perform well on a wide range of problems. “Nobody knows what that model looks like yet,” said Wei.

AlphaFold’s success was made possible by decades of biologists contributing experimentally determined structures to the Protein Data Bank. Likewise, advancing foundation models for biology will require large-scale, diverse datasets. Looking forward, Wei stressed, the limitation for these models will be that the data just doesn’t exist. This is why the industry needs to invest in high-quality multimodal datasets for DNA, protein, and RNA sequences, as well as other forms of data.

A more immediate challenge for PLMs is their limited context length, the maximum number of tokens a model can take as input. For an LLM, it limits the number of words the model can process at once; for a chatbot, it is a proxy for how much of the conversation it remembers. The longer the context length, the longer-range and more complex the patterns a model can detect.

The context length of current PLMs is no barrier to predicting the structures of individual proteins, which are not that long, noted Hwang. However, PLMs could miss long-range interactions across the genome. These “interactions are present in biological data, and we have evidence that we can capture those with existing methods and scaling,” said Hwang.
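The practical consequence of a fixed context length can be sketched in a few lines of Python: a long sequence is split into overlapping windows that each fit the model, and any two positions that never share a window are never seen together. The window size and overlap below are arbitrary toy values, not those of any particular PLM.

```python
def sliding_windows(tokens, context_length, overlap):
    """Split a token list into overlapping windows no longer than context_length.

    Each window can be fed to a model separately, but the model never sees
    two tokens that do not share a window, so interactions spanning more
    than one window can be missed.
    """
    if not 0 <= overlap < context_length:
        raise ValueError("overlap must be in [0, context_length)")
    stride = context_length - overlap
    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + context_length])
        if start + context_length >= len(tokens):
            break  # this window already reaches the end of the sequence
        start += stride
    return windows


# A made-up 10-residue sequence and a tiny, hypothetical context length of 4.
seq = list("MKTAYIAKQR")
for window in sliding_windows(seq, context_length=4, overlap=1):
    print("".join(window))
# MKTA
# AYIA
# AKQR
```

In this toy run, the first residue (`M`) and the last (`R`) never appear in the same window, so a model reading these windows one at a time has no direct way to relate them.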

When an AI model predicts a protein’s structure or where it binds DNA, it doesn’t reveal what it is looking for. Researchers are trying to peer into this black box by improving the interpretability of PLMs. “If we can understand which components of a protein are important to its encoding through the language model, we can start to modify those protein sequences to get the properties that we want,” said McWhite.

A Growing Universe of Custom Proteins

Nature uses only a limited fraction of the theoretically possible proteins. “There's a big promise of protein language models to combine with synthetic data to expand the known functional space of protein sequences,” said McWhite. PLMs could tap that unrealized diversity. Additionally, “we can then bring that back to train PLMs to create an even more universal description of protein sequences.”

As PLMs get better, researchers might be able to tune more parameters to design proteins for every conceivable use case. Hwang also sees better PLMs opening the door to “being able to design multi-protein context or identifying novel types of regulations or genomic syntax.”

AI is poised to expand the universe of proteins, and their interactions, available to synthetic biologists. “We very rarely go through an era like this where there is a very promising technology that can fundamentally change a lot of different things,” said Wei.