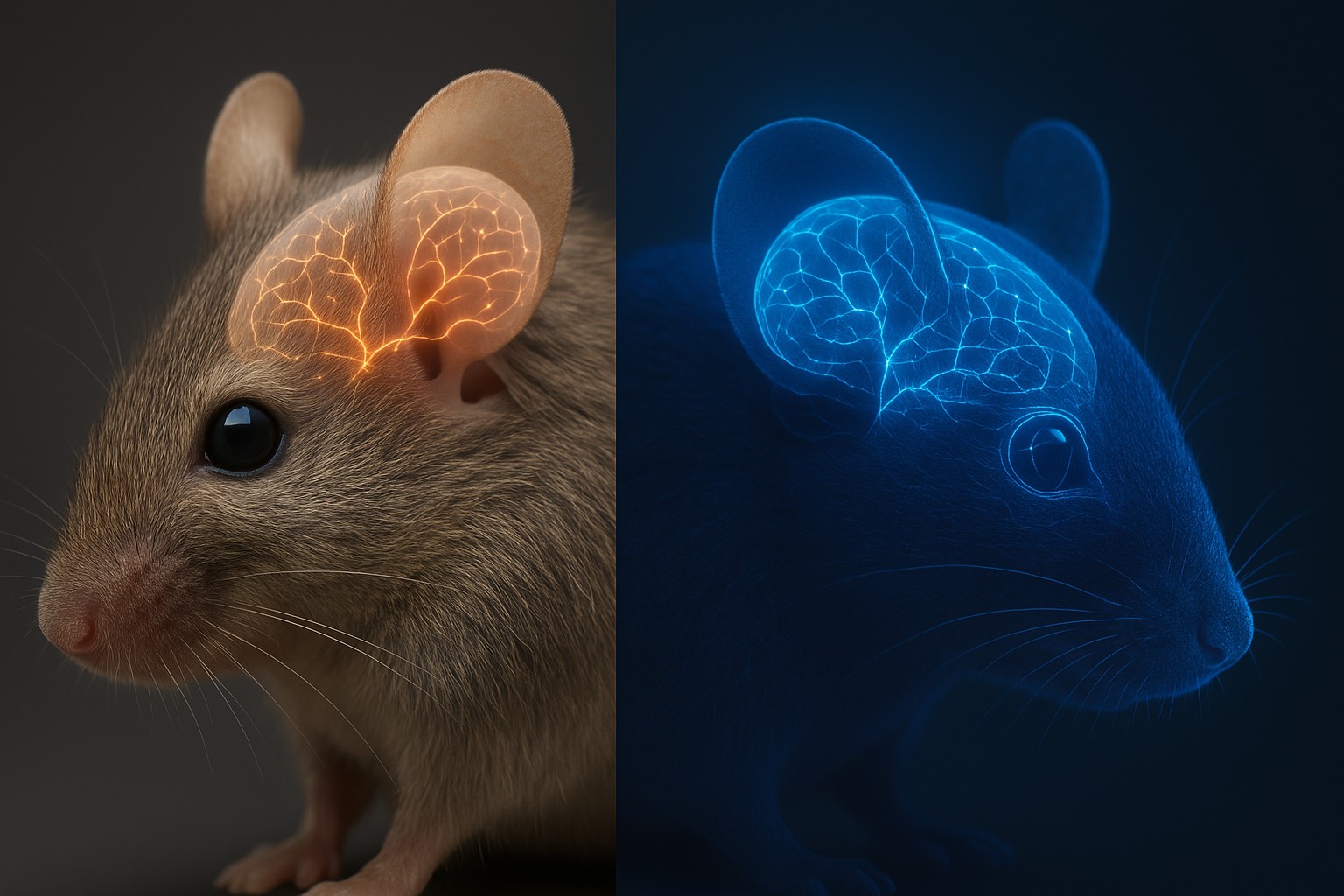

Digital Twins of Mouse Brains Could Rewrite Neuroscience

Imagine having the power to run millions of neuroscience experiments at once—all without ever lifting a scalpel. That’s the promise of a new artificial intelligence system developed by Stanford Medicine and its collaborators: a digital twin of the mouse visual cortex. Like a flight simulator for the brain, this AI model replicates how real neurons respond to visual stimuli, using data from live mice that watched action-packed movies.

Published recently in Nature, the study represents a significant leap in computational neuroscience. “If you build a model of the brain and it’s very accurate, that means you can do a lot more experiments,” said Andreas Tolias, PhD, Stanford professor of ophthalmology and senior author on the paper. “The ones that are the most promising you can then test in the real brain.”

This new tool doesn’t just mimic the brain’s responses—it actually predicts them. And unlike earlier attempts, it doesn’t crumble when shown something new.

Beyond the Training Set

Most previous AI models of the visual cortex were shackled to the data they were trained on, unable to generalize to new scenarios. This new digital twin changes that. It’s a type of foundation model: a class of powerful AI models that, after training on large datasets, can adapt to new tasks and unfamiliar inputs. Think ChatGPT, but for neuroscience.

“In many ways, the seed of intelligence is the ability to generalize robustly,” Tolias said. “The ultimate goal—the holy grail—is to generalize to scenarios outside your training distribution.”

The team achieved this by recording more than 900 minutes of brain activity from eight mice as they watched clips of fast-paced action movies, such as Mad Max. The footage was chosen to mimic the motion-rich environments mice might experience naturally. Why action flicks? Mice have blurry vision and respond strongly to motion, much as humans rely on peripheral vision to pick up movement. “Mice like movement, which strongly activates their visual system,” Tolias said.

The result was a large dataset pairing visual stimuli with neural responses, which formed the foundation for training. From it, the researchers built a core model and then tailored it to each individual mouse using only a small amount of additional data, producing personalized digital twins.

A New Lens on the Brain

These digital twins didn’t just reproduce known patterns of activity—they made accurate predictions about completely new videos and static images. Even more impressively, the model inferred the anatomical locations and cell types of thousands of neurons, as well as how those neurons connected to one another.

When the researchers compared these AI-generated predictions with electron microscopy images from the same mice (part of the MICrONS project, also published recently in Nature), the results aligned with striking precision.

This opens up vast new research possibilities. A digital twin isn’t constrained by biology—it doesn’t age, it doesn’t tire, and it can be tested endlessly. “Experiments that would take years could be completed in hours,” said Tolias. “We’re trying to open the black box, so to speak, to understand the brain at the level of individual neurons or populations of neurons and how they work together to encode information.”

In a companion study, also published in Nature, the team used the model to uncover how neurons choose their synaptic partners. Scientists already knew that similar neurons tend to connect, but the digital twin revealed which similarities matter most. It turns out that neurons are more likely to link with those that respond to the same type of stimulus, such as the color blue, than with those that happen to occupy the same patch of visual space.

“It’s like someone selecting friends based on what they like and not where they are,” Tolias said. “We learned this more precise rule of how the brain is organized.”
